Many organizations around the world are adopting GenAI technologies in their workflows to reap productivity gains within their teams and to achieve business outcomes that drive growth. Given how GenAI systems work, trust has become essential: users need reliable responses and transparency into how these systems generate answers to human textual input. Hallucination, where a system produces plausible-sounding but incorrect content, is keeping many organizations from deploying GenAI systems at scale.

Technical writers play a major role in the GenAI era in ensuring that users can trust GenAI-generated responses. They can produce GenAI-friendly content, help train GenAI systems to produce the right responses based on human feedback, and evaluate a GenAI system's responses before it is deployed to production.

Things to Consider in Evaluating GenAI Responses

1. Relevancy

The GenAI-generated response should be relevant to the customer's question or prompt. A response can only be relevant if the underlying retrieval mechanism retrieves relevant chunks from the knowledge base. It is therefore important to track evaluation metrics for relevancy.
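One simple way to put a number on retrieval relevancy is precision@k: of the top k chunks the retrieval step returns for a question, what fraction did a reviewer judge relevant? The Python sketch below assumes you have the ranked chunk IDs returned for one question and a set of IDs a technical writer marked as relevant. The function name and the IDs are hypothetical, and your assistive search tool may expose retrieval results differently.

```python
def precision_at_k(retrieved_ids, relevant_ids, k):
    """Fraction of the top-k retrieved chunks that a reviewer marked relevant.

    If fewer than k chunks were retrieved, the fraction is taken over the
    chunks that were actually returned.
    """
    if k <= 0:
        raise ValueError("k must be a positive integer")
    top_k = retrieved_ids[:k]
    if not top_k:
        return 0.0
    hits = sum(1 for chunk_id in top_k if chunk_id in relevant_ids)
    return hits / len(top_k)


# Hypothetical chunk IDs returned for one question, in ranked order,
# and the IDs a technical writer judged relevant to that question.
retrieved = ["kb-12", "kb-40", "kb-07", "kb-33"]
relevant = {"kb-12", "kb-07"}
print(precision_at_k(retrieved, relevant, k=3))
```

Averaging this score over the whole question set gives a single relevancy figure to track between content updates.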

2. Accuracy

Trust is fundamental to the adoption of GenAI-based agents, and accuracy plays a crucial role in evaluating GenAI responses. Accuracy metrics can be computed by comparing each GenAI response with the ground truth, that is, a reference answer verified by a technical writer or subject matter expert.
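As an illustration, one lightweight accuracy metric is token-overlap F1, a simplified version of the scoring used in extractive question-answering benchmarks such as SQuAD. It is a swapped-in example rather than a metric this article prescribes, and because it rewards shared wording, a correct answer phrased differently from the ground truth can still score low. The helper names are hypothetical.

```python
import re
from collections import Counter


def normalize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def token_f1(response, ground_truth):
    """Token-overlap F1 between a GenAI response and a reference answer."""
    pred = normalize(response)
    ref = normalize(ground_truth)
    if not pred or not ref:
        # Two empty texts count as a match; one empty text counts as a miss.
        return float(pred == ref)
    # Multiset intersection: each shared token counts as often as it
    # appears in both texts.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


print(token_f1("You can export articles as PDF.", "Articles can be exported as PDF."))
```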

3. User Feedback

User feedback also plays an important role in building trust. If a GenAI response is irrelevant or non-factual, users can flag it as inaccurate. This feedback should be used to retrain the GenAI-based agent so that it produces more accurate responses over time.
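Flagged responses are easier to act on when they are aggregated per question. The sketch below assumes a hypothetical feedback export of `(question, flagged)` pairs and lists the questions whose share of flagged answers meets a threshold. The threshold and minimum vote count are illustrative values, not recommendations.

```python
from collections import defaultdict


def questions_to_review(feedback, threshold=0.5, min_votes=3):
    """Return questions whose share of flagged answers is at least `threshold`.

    `feedback` is an iterable of (question, flagged) pairs, where `flagged` is
    True when a user reported the answer as irrelevant or non-factual.
    Questions with fewer than `min_votes` ratings are skipped because their
    flag rate is too noisy to act on.
    """
    totals = defaultdict(int)
    flags = defaultdict(int)
    for question, flagged in feedback:
        totals[question] += 1
        flags[question] += int(flagged)
    return sorted(
        q for q, n in totals.items() if n >= min_votes and flags[q] / n >= threshold
    )


# Hypothetical feedback log exported from an assistive search tool.
log = [
    ("How do I reset my password?", False),
    ("How do I reset my password?", True),
    ("How do I reset my password?", False),
    ("Can I export to PDF?", True),
    ("Can I export to PDF?", True),
    ("Can I export to PDF?", False),
]
print(questions_to_review(log))
```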

4. Error Handling

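If a GenAI response cannot be generated, the agent should reply courteously, for example by explaining that it could not find an answer and suggesting a next step, rather than returning an error or a fabricated answer.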
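A minimal sketch of a courteous fallback, assuming your integration lets you wrap the call that generates an answer. `generate` is a hypothetical stand-in for that call, and the fallback wording is only an example that a technical writer would adapt to the product's voice.

```python
FALLBACK_MESSAGE = (
    "Sorry, I couldn't find a reliable answer to that in our documentation. "
    "Please try rephrasing your question or contact our support team."
)


def answer_with_fallback(question, generate):
    """Return generate(question), or a courteous message if no answer is produced.

    `generate` is a hypothetical stand-in for the assistive search call. It
    returns the answer text, or None/an empty string when nothing relevant
    was found.
    """
    try:
        answer = generate(question)
    except Exception:
        # A real integration would also log the error here; the user only
        # sees the fallback message, never a stack trace.
        return FALLBACK_MESSAGE
    if not answer or not answer.strip():
        return FALLBACK_MESSAGE
    return answer


def timed_out_search(question):
    """Hypothetical generator that fails, e.g. because the model timed out."""
    raise TimeoutError("model request timed out")


print(answer_with_fallback("How do I add a category?", timed_out_search))
```

Catching the broad `Exception` keeps the sketch short; a production integration would usually handle specific error types separately.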

5. Response Time

Response time affects user experience. If responses take too long, users have to wait and may abandon the GenAI-based agent altogether. A balance has to be struck between user experience, cost, and accuracy.
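To make response time measurable, you can time each evaluation query and report percentiles rather than a single average, because the occasional slow response is what users tend to notice. This sketch uses only Python's standard library; the sample timings are hypothetical, and in practice you would wrap your own search call with `timed_call`.

```python
import statistics
import time


def timed_call(fn, *args):
    """Run fn(*args) and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


def latency_summary(latencies):
    """Median and 95th-percentile latency from at least two timings (seconds)."""
    # With n=20, quantiles() returns 19 cut points; the last one is the 95th
    # percentile. "inclusive" keeps the estimate within the observed range.
    cuts = statistics.quantiles(latencies, n=20, method="inclusive")
    return {"p50": statistics.median(latencies), "p95": cuts[-1]}


# Hypothetical timings, in seconds, for six evaluation queries.
timings = [0.8, 1.1, 0.9, 2.4, 1.0, 1.3]
print(latency_summary(timings))
```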


Frameworks to Evaluate GenAI Responses

Technical writers are best suited to evaluate the responses generated by GenAI-based assistive search because they curate accurate information across the organization and work with many subject matter experts. Judging these responses is highly subjective, so it is important to establish a baseline for GenAI-based assistive search responses using numerical metrics.

These metrics can guide improvements to the responses, either by revising the underlying content or by tuning the GenAI-based assistive search tool's functional parameters, such as the system message and chunk size. Formulate a diverse range of questions covering types such as “What,” “How,” and “Yes/No” to assess the effectiveness of GenAI-generated responses. Alongside these, add tricky questions that might challenge the GenAI's ability to respond accurately, as well as questions whose answers are not available in your knowledge base content.
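Keeping the question set as structured data makes it easy to check that every question type is covered before each evaluation run. The sketch below is one hypothetical way to do that; the type labels, the `EvalQuestion` fields, and the sample questions are assumptions, with `None` as the ground truth for questions the knowledge base cannot answer.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical labels for the question types; "unanswerable" marks questions
# whose answer is deliberately absent from the knowledge base.
REQUIRED_TYPES = {"what", "how", "yes_no", "tricky", "unanswerable"}


@dataclass
class EvalQuestion:
    question: str
    qtype: str
    ground_truth: Optional[str]  # None when the knowledge base has no answer


def missing_types(questions):
    """Return the required question types that the test set does not cover yet."""
    covered = {q.qtype for q in questions}
    return sorted(REQUIRED_TYPES - covered)


# Hypothetical test set for a product knowledge base.
test_set = [
    EvalQuestion("What is a category?", "what", "A category groups related articles."),
    EvalQuestion("How do I publish an article?", "how", "Click Publish in the editor."),
    EvalQuestion("Can I restore a deleted article?", "yes_no", "Yes, from the recycle bin."),
    EvalQuestion("What is the CEO's phone number?", "unanswerable", None),
]
print(missing_types(test_set))
```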

Two open-source frameworks can be used to evaluate the responses generated by GenAI-based assistive search.

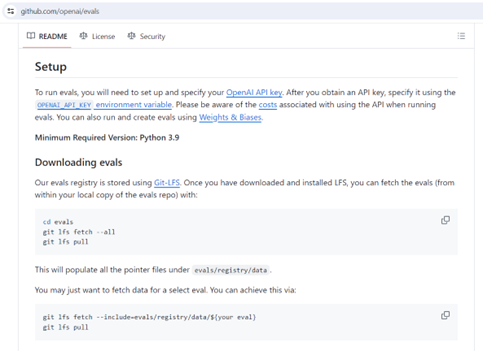

1. OpenAI Evals

OpenAI maintains the Evals framework, which has been released as an open-source GitHub project. Its customizable templates can be aligned with your own use cases, which streamlines your workflow. You can fork the GitHub project to evaluate GenAI responses for your knowledge base: for each question, write a human reference answer and compare it with the GenAI-based assistive search tool's response. Metrics can then be generated based on the following criteria:

- Match: check whether the GenAI-generated response starts with any of the human-written reference answers

- Includes: check whether the GenAI-generated response contains any of the human-written reference answers

- FuzzyMatch: check whether, after normalizing the text, the GenAI-generated response and any of the reference answers contain one another

Image Source: GitHub
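The comparison criteria can be approximated in plain Python. This hedged sketch mirrors the documented behavior of the Evals basic templates Match, Includes, and FuzzyMatch, in which the GenAI response is checked against a list of human-written reference answers. It does not import the `evals` package, and the normalization rules here are an assumption rather than the library's exact code.

```python
import string


def _normalize(text):
    """Lowercase, strip punctuation, and collapse whitespace."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def match(response, references):
    """Match: the response starts with one of the reference answers."""
    return any(response.startswith(ref) for ref in references)


def includes(response, references):
    """Includes: one of the reference answers appears inside the response."""
    return any(ref in response for ref in references)


def _contains_either(a, b):
    # Treat empty strings as matching only each other, so an empty
    # response does not trivially "match" every reference.
    if not a or not b:
        return a == b
    return a in b or b in a


def fuzzy_match(response, references):
    """FuzzyMatch: after normalizing, either text contains the other."""
    norm = _normalize(response)
    return any(_contains_either(norm, _normalize(ref)) for ref in references)


# Hypothetical response and reference answer for one question.
response = "Articles can be exported as pdf."
references = ["PDF"]
print(match(response, references), includes(response, references),
      fuzzy_match(response, references))
```

The example shows why the stricter checks can under-count: the answer is correct, but only the normalized fuzzy comparison recognizes it.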

Scores and the corresponding metrics can be calculated using the OpenAI Evals GitHub repository. Technical writers should build a large corpus of questions and ground-truth answers. This corpus can be reused each time the underlying content changes or a new version of the GenAI-based assistive search tool is released.
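Evals reads test samples from JSONL files, one JSON object per line. Assuming the chat-style sample format shown in the Evals documentation for its basic templates (an `input` list of messages plus an `ideal` answer), a corpus of questions and ground-truth answers could be exported as follows. The question and answer pairs, system prompt, and file name are hypothetical; registering and running the eval is covered in the Evals repository.

```python
import json

# Hypothetical questions and writer-approved ground-truth answers.
corpus = [
    ("How do I publish an article?", "Click Publish in the editor."),
    ("Can I restore a deleted article?", "Yes, from the recycle bin."),
]

# Assumed system prompt; adapt it to match how your assistive search is prompted.
SYSTEM_PROMPT = "Answer using only the product documentation."


def to_eval_samples(pairs):
    """Convert (question, answer) pairs into chat-style sample records."""
    return [
        {
            "input": [
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": question},
            ],
            "ideal": answer,
        }
        for question, answer in pairs
    ]


def write_jsonl(records, path):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")


write_jsonl(to_eval_samples(corpus), "kb_samples.jsonl")
```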

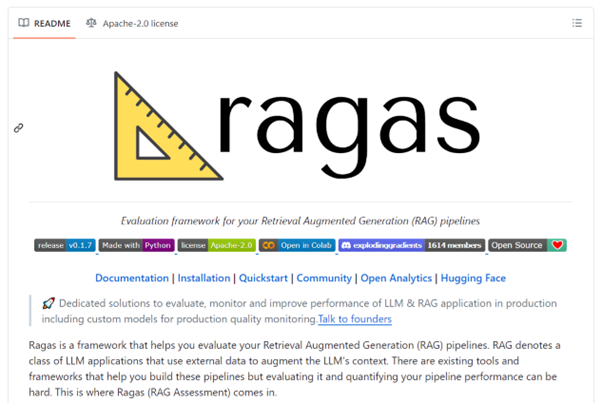

2. RAGAS Framework

The RAGAS (Retrieval Augmented Generation Assessment) framework focuses on assessing how well GenAI-based assistive search retrieves content from your knowledge base and generates a response to the prompt (question). For evaluation purposes, the most important metrics are:

- Faithfulness – A measure of the factual consistency of the generated response against the retrieved context

- Answer relevancy – A measure of how relevant the answer is to the prompt (question)

- Answer semantic similarity – A measure of the semantic resemblance between the generated response and the ground truth

- Answer correctness – A measure of the accuracy of the generated response compared with the ground truth
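To make the faithfulness definition concrete: RAGAS splits the generated response into individual claims, checks each claim against the retrieved context, and reports the fraction of claims that the context supports. The sketch below computes only that final ratio, assuming the claims and their verdicts already exist (RAGAS uses an LLM for both steps). It does not call the `ragas` library, whose API varies between releases.

```python
def faithfulness(claim_verdicts):
    """Fraction of a response's claims that the retrieved context supports.

    `claim_verdicts` maps each claim extracted from the GenAI response to True
    if the retrieved context supports it and False otherwise. A response with
    no extracted claims scores 0.0 here by convention.
    """
    if not claim_verdicts:
        return 0.0
    return sum(claim_verdicts.values()) / len(claim_verdicts)


# Hypothetical claims extracted from one response, with reviewer verdicts.
verdicts = {
    "Articles can be exported as PDF.": True,
    "PDF export is available on every plan.": False,
}
print(faithfulness(verdicts))
```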

The framework can also combine these metrics into a single score, the harmonic mean of the individual metrics, which can be used to compare different scenarios and test cases.

Image Source: GitHub
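A harmonic mean is a deliberate choice for a combined score: it is pulled toward the weakest metric, so high relevancy cannot hide poor faithfulness. The sketch below uses Python's `statistics.harmonic_mean` on hypothetical scores for one test run.

```python
from statistics import harmonic_mean

# Hypothetical per-metric scores for one test run, each between 0 and 1.
scores = {
    "faithfulness": 0.9,
    "answer_relevancy": 0.8,
    "answer_correctness": 0.6,
}

combined = harmonic_mean(list(scores.values()))
arithmetic = sum(scores.values()) / len(scores)
print(f"harmonic: {combined:.3f}, arithmetic: {arithmetic:.3f}")
```

With these scores the harmonic mean is about 0.745, slightly below the arithmetic mean of about 0.767. The gap widens as any single metric approaches zero.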

The OpenAI Evals framework is simple to use, while the RAGAS framework offers many metrics to suit different business use cases. Depending on the use case, technical writers can choose either framework to help ensure that GenAI-based assistive search returns accurate and reliable responses. As customers give feedback on GenAI-generated responses, analyzing the questions and the feedback is important for improving the trustworthiness of your GenAI assistive search engine. GenAI assistive search analytics can also provide valuable insight for improving the knowledge base content.

Improving Trust

Evaluating a GenAI system's responses to a set of questions and comparing them with the ground truth provides valuable insight into the reliability of GenAI systems such as assistive search and chatbots. For your business use case, technical writers can collect more questions and prompts over time and build a resilient test bed for evaluating GenAI systems. This helps build trust in the GenAI system and drives customer adoption over time. Technical writers therefore carry significant responsibility for building the trust in GenAI systems that leads to customer adoption, work that overlaps with change management and product marketing practices.
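A test bed pays off when the same questions are re-run after every content update or tool release and the scores are compared. The sketch below is a hypothetical regression check: it takes per-question scores from a baseline run and a candidate run, from whichever metric you use, and lists the questions that got worse by more than a tolerance. The tolerance value and sample scores are assumptions.

```python
def find_regressions(baseline, candidate, tolerance=0.05):
    """List questions whose score dropped by more than `tolerance`.

    `baseline` and `candidate` map each test question to a score between 0
    and 1 from two evaluation runs, e.g. before and after a content update.
    Questions missing from the candidate run are reported as well, because
    they were not evaluated.
    """
    regressions = []
    for question, old_score in baseline.items():
        new_score = candidate.get(question)
        if new_score is None or old_score - new_score > tolerance:
            regressions.append(question)
    return sorted(regressions)


# Hypothetical scores from the previous run and from the run after an update.
baseline = {"How do I publish an article?": 0.90, "Can I restore a deleted article?": 0.80}
candidate = {"How do I publish an article?": 0.92, "Can I restore a deleted article?": 0.60}
print(find_regressions(baseline, candidate))
```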

Closing Remarks

This is the prime time for technical writers to pick up new skills in evaluating the responses of GenAI-based assistive search. More importantly, understanding evaluation metrics and focusing relentlessly on improving the trustworthiness of GenAI-based systems should help technical writers quantify the value of their efforts in driving the adoption of these technologies on their knowledge base platforms. Technical writers can also advocate best practices for evaluating GenAI responses within their communities to encourage wider adoption of these practices.
