Tables are an effective way to represent complex information in text. A table condenses a lot of information and presents it in a visually appealing way, and it conveys complex informational structures to end users without any need for media artifacts. Technical writers use tabular data to identify key points, trends, similarities, and relationships, which helps them analyze information and construct documents. As a result, tables have become a standard part of the content creation process in technical writing.

Overview of table formats

Table content is organized in rows and columns. A table usually contains header information that gives context to the value in each table cell. Any simple table can be refactored into ordered hierarchies, leading to a multi-dimensional table. Much of the structured information in the world is organized in table form. One of the simplest use cases for tables is the table of contents, which summarizes the whole knowledge base in the form of indexes and themes. A technical writer may prefer a row table or a column table depending on the type of content and the limitations of the user interface. Column tables are preferred for mobile devices, while row tables are preferred for desktop devices.

Large Language Model Challenges

Large Language Models (LLMs) are trained on a vast corpus of text data from the internet. That text is largely unstructured, so the structural nature of tables poses unique challenges. An LLM is designed to process and parse massive amounts of unstructured text, and it confronts a paradigm shift when facing tabular data. Another layer of complexity is numerical reasoning and aggregation over tabular data, which often contains a dense mix of numerical and textual values. This density risks overshadowing crucial details, potentially impeding the LLM’s decision-making abilities. Although technical writers prefer to present certain information in tables, these limitations affect how well tabular information can be used to generate responses to customers’ questions (prompts). To help LLMs understand structured information, tables must be refactored.

Refactoring tables for LLMs

The tables inside your knowledge base content need to be refactored to make them suitable for ingestion by business applications powered by Large Language Models (LLMs). The following are some best practices to follow while refactoring a table:

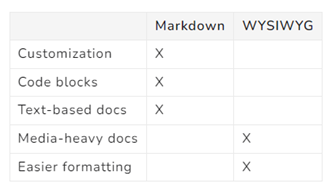

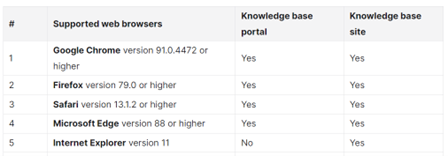

- Do not use symbols inside the table content as they are removed during pre-processing steps

- Do not leave null values or empty cells inside your table content, as GenAI-based agents might hallucinate while trying to use that data

- Ensure that tables have header information along with proper rows

- If you wish to include binary information in the table content, use Yes/No, True/False, or another consistent pair of values. Ensure that this convention is described in the system message of your RAG (Retrieval Augmented Generation) tool

- The table should be complete such that all cells have values in them

- Table cell values can be a mix of numeric values and text. However, it is recommended to have one type of data present inside those table cells
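
The guidelines above can be turned into a simple pre-ingestion check. The following is a minimal sketch rather than a definitive implementation: the function names, the list of placeholder values treated as empty, and the symbol pattern are all assumptions made for illustration, and the "one type of data" guideline is interpreted here as one type per column.

```python
import re

# Assumed placeholder values that count as "empty"; adjust to your content.
EMPTY_MARKERS = {"", "-", "n/a", "na", "null", "none"}

def is_number(value: str) -> bool:
    """Return True if the cell text parses as a plain number."""
    try:
        float(value.replace(",", ""))
        return True
    except ValueError:
        return False

def check_table(headers, rows):
    """Return a list of human-readable guideline violations."""
    issues = []
    # Guideline: tables need header information.
    if not headers or any(not h.strip() for h in headers):
        issues.append("missing or blank header")
    for r, row in enumerate(rows, start=1):
        # Guideline: every row should line up with the headers.
        if len(row) != len(headers):
            issues.append(f"row {r}: expected {len(headers)} cells, found {len(row)}")
        for c, cell in enumerate(row, start=1):
            text = str(cell).strip()
            # Guideline: no null values or empty cells.
            if text.lower() in EMPTY_MARKERS:
                issues.append(f"row {r}, column {c}: empty cell")
            # Guideline: avoid symbols such as check marks (assumed pattern).
            elif re.search(r"[^\w\s.,/%()-]", text):
                issues.append(f"row {r}, column {c}: symbol in {text!r}")
    # Guideline (interpreted per column): prefer one type of data.
    for c, header in enumerate(headers):
        cells = [str(row[c]).strip() for row in rows if c < len(row)]
        cells = [x for x in cells if x.lower() not in EMPTY_MARKERS]
        if len({is_number(x) for x in cells}) > 1:
            issues.append(f"column {header!r}: mixes numeric and text values")
    return issues

if __name__ == "__main__":
    headers = ["Plan", "Users", "SSO"]
    rows = [["Free", "5", "No"], ["Pro", "", "✓"], ["Enterprise", "Unlimited", "Yes"]]
    for issue in check_table(headers, rows):
        print(issue)
```

In this made-up example the check reports the empty cell, the check-mark symbol, and the "Users" column that mixes a number with the word "Unlimited". A writer could then replace the symbol with Yes, fill in the missing value, and decide how to express "Unlimited" consistently.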

Evaluation of tables for GenAI-based agents

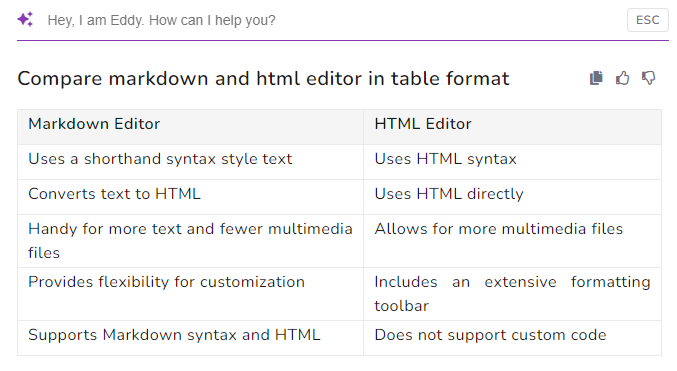

Table data can be passed to LLMs in markdown format or as HTML tags. The markdown format is limited in how well it can express header information and multiple table dimensions. HTML tags offer more flexibility but consume more of the token budget imposed by the LLM APIs. It is recommended to avoid tables, as state-of-the-art (SOTA) LLM technology only produces 74% accurate results when answering users’ prompts (questions) based on table content. Technical writers should articulate complex information in the form of text rather than tables. Producing content in table format (in the output/response) is now the choice of the user, not the content producer. Thus, a technical writer needs to give up these tables to provide more freedom to the end user.
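
To make the trade-off between markdown, HTML, and plain text more concrete, here is a small sketch that serializes the same table in all three ways. The helper names and the sample pricing table are hypothetical, and character count is used only as a rough stand-in for token count, since real token counts depend on the tokenizer of the LLM in use.

```python
from html import escape

def to_markdown(headers, rows):
    """Serialize a table as a markdown pipe table."""
    lines = [
        "| " + " | ".join(headers) + " |",
        "| " + " | ".join("---" for _ in headers) + " |",
    ]
    lines += ["| " + " | ".join(row) + " |" for row in rows]
    return "\n".join(lines)

def to_html(headers, rows):
    """Serialize a table as HTML tags."""
    head = "".join(f"<th>{escape(h)}</th>" for h in headers)
    body = "".join(
        "<tr>" + "".join(f"<td>{escape(c)}</td>" for c in row) + "</tr>"
        for row in rows
    )
    return f"<table><tr>{head}</tr>{body}</table>"

def to_sentences(headers, rows):
    """Describe each row as one sentence keyed by the first column."""
    sentences = []
    for row in rows:
        facts = ", ".join(f"{h} is {c}" for h, c in zip(headers[1:], row[1:]))
        sentences.append(f"For {headers[0]} {row[0]}: {facts}.")
    return " ".join(sentences)

if __name__ == "__main__":
    headers = ["Plan", "Users", "SSO"]
    rows = [["Free", "5", "No"], ["Pro", "50", "Yes"]]
    for name, render in [("markdown", to_markdown), ("html", to_html), ("text", to_sentences)]:
        output = render(headers, rows)
        # Character count is only a rough proxy for token usage.
        print(f"{name}: {len(output)} characters")
        print(output)
```

For this sample the HTML form is roughly twice as long as the markdown form, which illustrates the token cost mentioned above. The sentence form repeats the header with every value, so each fact still carries its context when the content is split into chunks.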

LLMs can produce a response in table format. If your organization has a specified table structure, the LLMs can either be fine-tuned on your organizational table format or be guided toward it through careful prompt engineering by end users. Technical writers must evaluate the responses that the GenAI-based agent generates from table content for specific user questions (prompts). OpenAI Evals, RAGAS, and Galileo labs frameworks provide a base for assessing the quality of the generated response.
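
Before adopting one of these frameworks, a writer can get a first impression with a very small spot check. The sketch below does not use the API of any of the frameworks named above. It assumes a hypothetical `ask_agent` callable that sends a question to your GenAI-based agent and returns its answer as text, and it uses a crude whole-word match against the value expected from the table.

```python
import re

def evaluate(ask_agent, cases):
    """Return the share of cases whose expected value appears in the answer.

    ask_agent: hypothetical callable, question -> answer text.
    cases: list of (question, expected_value) pairs taken from the table.
    """
    if not cases:
        return 0.0
    hits = 0
    for question, expected in cases:
        answer = ask_agent(question)
        # Whole-word match so that "5" does not count as found inside "50".
        pattern = r"\b" + re.escape(expected) + r"\b"
        if re.search(pattern, answer, flags=re.IGNORECASE):
            hits += 1
    return hits / len(cases)

def fake_agent(question):
    """Stand-in for a real agent call, with canned answers for the demo."""
    canned = {
        "How many users does the Pro plan allow?": "The Pro plan allows 50 users.",
        "How many users does the Free plan allow?": "The Free plan allows 10 users.",
    }
    return canned.get(question, "I do not know.")

if __name__ == "__main__":
    cases = [
        ("How many users does the Pro plan allow?", "50"),
        ("How many users does the Free plan allow?", "5"),
    ]
    print(f"accuracy: {evaluate(fake_agent, cases):.0%}")
```

A string match like this only catches obvious misses. It cannot judge paraphrased or partially correct answers, which is where a dedicated evaluation framework becomes useful.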

Conclusion

Tables provide an elegant way to articulate complex information, but current LLM technology struggles with them, so it is recommended to avoid adding tables to your knowledge base content. Where a table must be kept, refactoring it according to the proposed guidelines will help current LLM technology generate more accurate responses based on the table content. Given that GenAI-based agents will act as an interface between your end users and your content, it is important to let end users choose the format in which they want the response.
