Chatbots powered by GenAI technologies are increasingly prominent on documentation platforms. Customers with prompt engineering knowledge ask a chatbot better questions and get more accurate responses. This marks a paradigm shift in how customers interact with documentation sites. Moreover, customers prefer self-service over waiting for a response from a customer support agent. Increasingly, they want tasks accomplished for them rather than carrying out each activity themselves.

AI agents can plan a task, make decisions, and execute a set of actions autonomously. They are gaining traction in many domains and are highly relevant to technical writing practice. For example, technical writers can use a writing agent, a meeting notes agent, and so on to accomplish some of their goals. Because AI agents act autonomously, building trust in chatbot responses and in an AI agent’s accomplishment of a goal requires monitoring the actions the AI takes while generating a response. This monitoring contributes to the explainability of AI and makes AI systems easier to audit. LLM observability is a practice that helps to monitor AI and to comply with AI regulations around the world that call for auditability and accountability.

What is LLM Observability?

In traditional machine learning, there is a practice called MLOps whereby, once a model is deployed, all model settings, inputs, and outputs are logged into a system. This logged data is used to monitor model drift, data drift, and so on, and it helps to prioritize retraining the existing model with new data. In the GenAI world, the equivalent of MLOps is LLMOps, and its monitoring practice is generally called LLM observability. LLM observability helps data scientists log all aspects of a GenAI system. These logs, called traces, are useful in scenarios such as:

- Monitor content retrieval, prompts, reranker outputs, and the third-party LLM call that generates a response in a RAG system.

- Understand the latency between different components in the RAG system.

- Understand how a response is generated in simple prompt engineering tasks such as summarization.

- Understand interactions inside AI agents.

- Log the inputs and outputs of the GenAI system for compliance.

LLM observability is essential for tracking how a GenAI system produces its outputs and responses. Without tracking, we cannot tell which system components to change in order to alter its future behavior. For example, if a chatbot produces an inaccurate response due to hallucination, it is important to log that response so that the behavior can be corrected. The correction might involve fixing system instructions, upgrading to a new LLM, or fixing content retrieval. LLM observability indicates where to apply the fix.

LLM Observability logging and insights

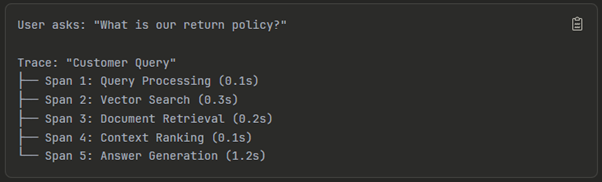

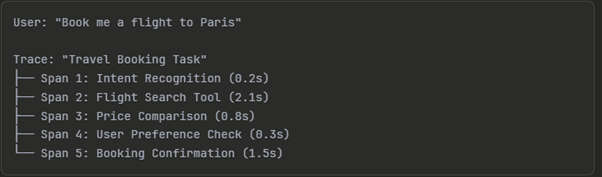

LLM observability is built on logged data. An individual log entry is called a span, and a collection of spans is called a trace. A trace provides a comprehensive view of the data collected throughout a multi-step workflow. How granularly you track activity inside a GenAI app depends on your use case and what needs to be optimized. For example, if you want to understand the latency of your GenAI system, it is advisable to track every component's activity as a span so that you gain a holistic view of the system.
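The relationship between spans and a trace can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the API of any particular observability tool: the `Span` and `Trace` classes, the span names, and the `hypothetical-llm` model name are all invented for this example, and `time.sleep` stands in for real retrieval and LLM calls.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field


@dataclass
class Span:
    """One logged step, for example retrieval or the LLM call (hypothetical class)."""
    name: str
    start: float
    end: float = 0.0
    attributes: dict = field(default_factory=dict)

    @property
    def latency_ms(self) -> float:
        return (self.end - self.start) * 1000.0


@dataclass
class Trace:
    """A collection of spans covering one end-to-end request (hypothetical class)."""
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    spans: list = field(default_factory=list)

    @contextmanager
    def span(self, name: str, **attributes):
        # Record the start time, run the wrapped step, then record the end time.
        current = Span(name=name, start=time.perf_counter(), attributes=attributes)
        try:
            yield current
        finally:
            current.end = time.perf_counter()
            self.spans.append(current)

    def total_latency_ms(self) -> float:
        return sum(s.latency_ms for s in self.spans)


trace = Trace()
with trace.span("retrieve", top_k=3):
    time.sleep(0.01)  # stand-in for a real retrieval call
with trace.span("generate", model="hypothetical-llm"):
    time.sleep(0.02)  # stand-in for a real LLM call

for s in trace.spans:
    print(f"{s.name}: {s.latency_ms:.1f} ms {s.attributes}")
print(f"total: {trace.total_latency_ms():.1f} ms")
```

Wrapping each component in its own span is what makes per-component latency visible; a single timer around the whole request would only give the total.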

These are some of the capabilities that should be part of an LLM observability toolkit:

- Latency & Performance Metrics

- Token Usage & Cost Tracking

- Hallucination & Factuality Detection

- Prompt & Response Logging

- Evaluation Metrics

- Tracing & Dependency Tracking

- Error Detection

- Safety & Compliance Checks

- Explainability & Debugging Aids

Here is an example of a trace in a Chatbot system:

Trace in the RAG system

A trace can be generated for the complete RAG system, from user prompt to response generation. Individual steps in the workflow can be logged as spans. A typical trace looks like this:
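As a hedged illustration rather than output from a real system, the Python sketch below represents three hypothetical RAG traces as lists of spans and averages the latency of each step across them. The span names (`embed_query`, `retrieve`, `rerank`, `llm_generate`) and all latency values are assumptions made up for this example.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical traces: each trace is a list of (span name, latency in ms).
# The numbers are made up for illustration only.
traces = [
    [("embed_query", 20), ("retrieve", 120), ("rerank", 60), ("llm_generate", 900)],
    [("embed_query", 25), ("retrieve", 480), ("rerank", 55), ("llm_generate", 850)],
    [("embed_query", 22), ("retrieve", 150), ("rerank", 65), ("llm_generate", 1100)],
]


def average_latency_by_span(traces):
    """Average the latency of each span name across all traces."""
    latencies = defaultdict(list)
    for trace in traces:
        for name, latency_ms in trace:
            latencies[name].append(latency_ms)
    return {name: mean(values) for name, values in latencies.items()}


def slowest_span(traces):
    """Return the span name with the highest average latency."""
    averages = average_latency_by_span(traces)
    return max(averages, key=averages.get)


averages = average_latency_by_span(traces)
for name, avg in averages.items():
    print(f"{name}: {avg:.1f} ms")
print("bottleneck:", slowest_span(traces))
```

Averaging over many traces, rather than inspecting one, helps separate a consistently slow component from a one-off spike such as the 480 ms retrieval in the second trace.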

Using a large number of traces, it is possible to identify and fix:

- Retrieval quality issues

- Context window problems

- Slow performance bottlenecks

- Embedding drift

In this case, LLM observability can help to improve response quality and optimize latency for a better customer experience.

Trace in the AI Agent system

A trace can be generated for each agentic workflow call, giving a granular view of how each component in the agentic system works. A typical trace for a travel AI agent would look like this:
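The sketch below is one hypothetical way to represent such a trace in Python and to scan it for a possible loop. The step types, tool names (`search_flights`, `search_hotels`), arguments, and the `max_repeats` threshold are all assumptions for illustration; a real agent framework would define its own span schema.

```python
import json

# Hypothetical spans from one run of a travel-planning agent.
# Tool names and arguments are illustrative, not from a real system.
agent_trace = [
    {"step": 1, "type": "plan", "output": "search flights, then hotels"},
    {"step": 2, "type": "tool_call", "tool": "search_flights", "args": {"to": "LIS"}},
    {"step": 3, "type": "tool_call", "tool": "search_hotels", "args": {"city": "Lisbon"}},
    {"step": 4, "type": "tool_call", "tool": "search_hotels", "args": {"city": "Lisbon"}},
    {"step": 5, "type": "tool_call", "tool": "search_hotels", "args": {"city": "Lisbon"}},
    {"step": 6, "type": "final_answer", "output": "itinerary draft"},
]


def find_repeated_tool_calls(trace, max_repeats=2):
    """Flag tools called with identical arguments more than max_repeats times in a row."""
    flagged = []
    previous_key = None
    run_length = 0
    for span in trace:
        if span["type"] != "tool_call":
            # Any non-tool step breaks a run of identical calls.
            previous_key, run_length = None, 0
            continue
        # Serialise arguments deterministically so equal calls compare equal.
        key = (span["tool"], json.dumps(span["args"], sort_keys=True))
        run_length = run_length + 1 if key == previous_key else 1
        previous_key = key
        if run_length == max_repeats + 1:
            flagged.append(span["tool"])
    return flagged


print(find_repeated_tool_calls(agent_trace))  # ['search_hotels']
```

A run of identical consecutive calls is only a heuristic signal of a loop; legitimate retries can trigger it, so the threshold would need tuning against real traces.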

Using many traces, it is possible to identify and fix:

- Tool selection problems

- Infinite loops

- Poor planning

- Context loss

In this case, LLM observability supports workflow optimization, prompt engineering, and agent behavior tuning, which makes the AI agent more efficient.

Compliance with the EU AI Act

Under the EU AI Act, transparency and documentation are key requirements, and the specific obligations depend on the risk category of the AI system. LLM observability can support these requirements in several ways:

- The AI system provider must document the AI system's capabilities, limitations, and performance. An LLM observability tool helps with automated logging of LLM behavior and the LLM decision-making process, along with performance metrics.

- The AI system provider must continuously monitor the AI system for risks and harmful outputs. An LLM observability tool helps with bias detection, safety monitoring, and detection of unusual behavior.

- LLM observability helps AI systems with data governance in terms of data quality tracking, understanding data provenance, and data bias detection.

- Data traces help explain why and how the AI system responds in a certain way.

- Data traces also help with incident reporting.

Your Next Step: Invest in LLM observability

Investing in LLM observability is a strategic initiative for any organization working with a GenAI system. LLM observability helps address core challenges, such as understanding the non-deterministic behavior of chatbots, auditing complex multi-step workflows, assessing the quality of chatbot responses, and mitigating security risks. The ability to trace every aspect of a GenAI system can improve quality and reliability by up to 40% in real-time scenarios. As GenAI systems become more complex, LLM observability provides a way to keep meeting regulatory compliance requirements across jurisdictions.
